Today, as every day, I was bombarded with articles to read. You, too?

Today, Testing for the Masses: More affordable assessment services reveal a new focus on application security caught my eye.

As some of my readers may know, I’ve spent a lot of time and effort on re-creating the customer web application security testing viewpoint. And, of course, Markus and I often don’t agree. So, I trundled off to read what he’d opined on the subject.

Actually, it’s great to see that ideas that were bleeding edge a few years ago are now being championed in the press!

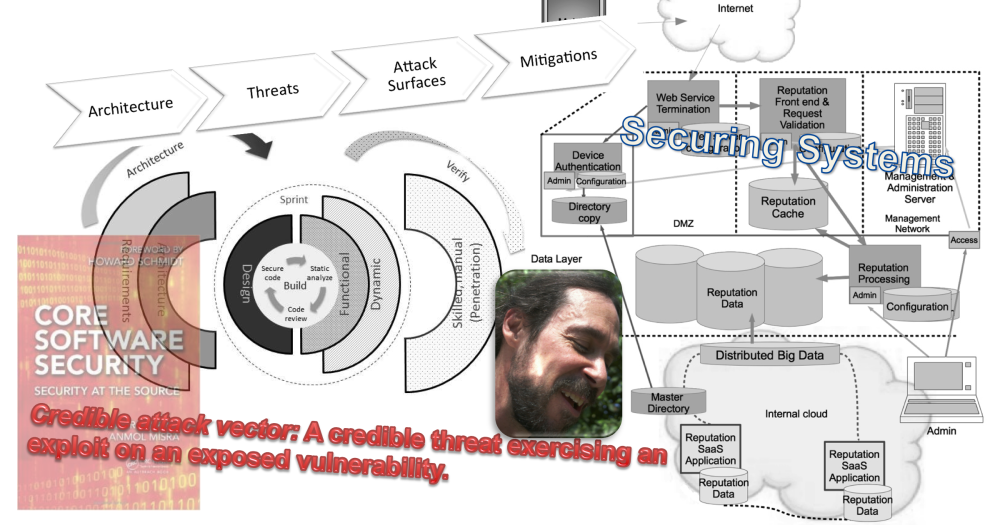

* Build security in the design

* Code securely

* Verify both the design and the code

The “design” part of the problem requires reasonable maturity of a security architecture practice.

And, web application code must be tested and fixed before deployment, just as this article suggests. All good. If we could achieve these goals on a broad scale it would be a much better world that what we’ve got, which has been to deploy more than 100 millon lines of vulnerable code to the web (see Jeremiah Grossman’s white papers on this topic)

Further, the writers suggested that price points of web application testing tools must fall. And here’s where the ball got dropped. Why?

The question of price is related to one of the main limitations on getting at least the simple and egregious bugs out of our web code.

The tool set has until recently been made for and focused to people like Markus Ranum – security experts, penetration testing gurus. These tools have emphasized broad coverage and deep analysis. And that emphasis has been very much at the expense of:

* tool cost

* tool complexity

* noisy results that must be hand qualified by an expert

There aren’t that many Markus Ranum’s in the world. In fact, as far as I know, only one! Seriously, there are not that many experienced web application vulnerability analysts. My estimate of approximately 5000 was knocked down by some pundit (I don’t remember who?) at a SANs conference at which I was speaking. His guesstimate? 1500. Sigh. Imagine how many lines of code each has to analyze to even make a dent in those 100 million lines deployed.

Each of the major web application vulnerability scanners assumed that the user was going to become an expert user in the tool. User interfaces are complex. Setting the scope and test suite are a seriously non-trivial exercise.

And finally, there is the noise in the results – all those false positives. These tools all assume that the results will be analyzed by a security expert.

Taken together, we have what I call the “lint problem”. For those who remember programming in the C language, there is a terrifically powerful analyzer called “lint”. This tool can work through a body of code and find all kinds of additional errors that the compiler will miss. Why doesn’t everyone use lint, then? Actually, most C programmers never use lint.

Lint is too hard and resource intensive to tune. For projects that are more complex than one of two source files, the cost of tuning lint far out weighs it’s value. So, only a few programmers ever used the tool. On a 3 month project, just about the time the project is over is when lint is finally returning just the useful results. Not very timely, I’m afraid.

And so, custom web application vulnerability testing has remained in the hands of experts, people like Markus Ranum, who, indeed, makes his living by reviewing and fixing code (and rumour has it, a very good living, indeed).

So, of course, Markus probably isn’t going to suggest the one movement that will actually bring a significant shift this problem.

But I will.

The vulnerability check must be put into the hands of the developer! Radical idea?

And, that check can’t be a noisy, expert driven analysis.

* The scan must be no more difficult to run than a compiler or linker. (which are, after all, the tools in use by many web developers every day)

* The scan should integrated seamlessly into the existing workflow

* The scan must not add a significant time to the developer’s workflow

* The results must be easy to interpret

* The results must be as accurate as a compiler. E.g., the developer must be able to trust the results with very high confidence

Attack these bugs at the source. And, to achieve that, give the developer a tool set that s/he can use and trust.

And, the price point has to be in accordance with other, similar tools.

I happen to know of several organizations that have either experimented with this concept or have put programs into place based on these principles (can’t name them, sorry. NDA) And guess what? It works!

Hey, toolmakers, the web developer market is huge! There’s money to be made here, folks.

Markus, I think you missed a key point, and are pointing in the wrong direction (slightly)

cheers

/brook