I was attending the 2nd SANS What Works in Security Architecture Summit, listening to Alan Paller, Director of Research at the SANS Institute, and Michele Guel, National Cybersecurity Award winner. Alan has given us all a call to action, namely, to build a collection of architectural solutions for security architecture practitioners. The body of work would be akin to Up To Date for medical clinicians.

I believe that creating these guides is one of the next critical steps for security architects as a profession. Why?

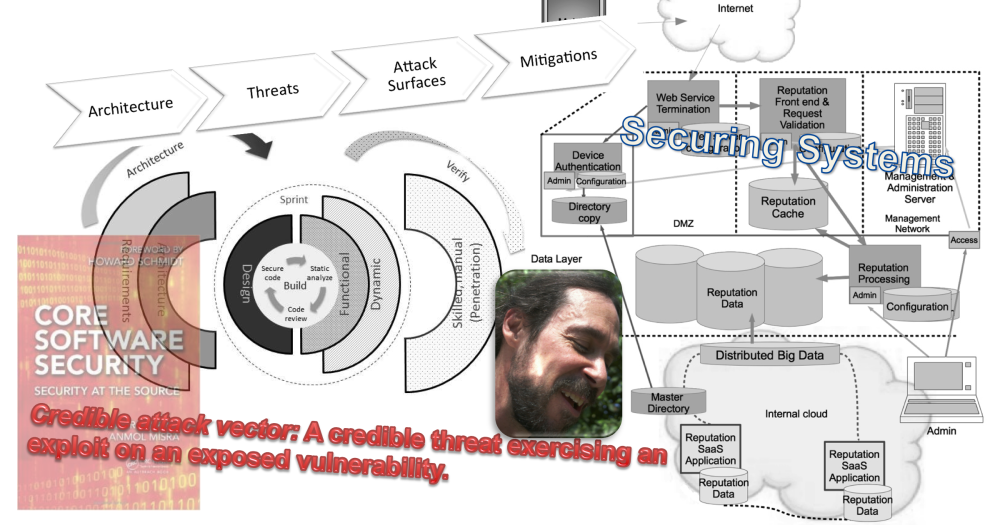

It’s my contention that most experienced practioners are carrying a bundle of solution patterns in their heads. Like a doctor, we can eyeball a system, view the right type of system diagram, get a few basic pieces of information, and then begin very quickly to assess which of those patterns may be applicable.

In assessing a system for security, of course, it is often like peeling an onion. More and more issues get uncovered as the details are uncovered. Still, I’ve watched experienced architects very quickly zero in to the most relevant questions. They’ve seen so many systems that they intuitively understand where to dig deeper in order to assess attack vectors and what is not important.

One of the reasons this can happen so quickly is almost entirely local to each practitioner’s environment. Architects must know their environments intimately. That local knowledge will eliminate irrelevant threats and attack patterns. Plus, the architect also knows which security controls are pre-existing in supporting systems. These controls have already been vetted.

Along with the local knowledge, the experienced practioner will have a sense of the organization’s risk posture and security goals. These will highlight not only threats and classes of vulnerabilities, but also the value of assets under consideration.

Less obvious, a good security architecture practitioner brings a set of practical solutions that are tested, tried, and true that can be applied to the analysis.

As a discipline, I don’t think we’ve been particularly good at building a collective knowledge base. There is no extant body of solutions to which a practioner can turn. It’s strictly the “school of hard knocks”; one learns by doing, by making mistakes, by missing important pieces, by internalizing the local solution set, and hopefully, through peer review, if available.

But what happens when a lone practioner is confronted with a new situation or technology area with which he or she is unfamiliar? Without strong peer review and mentorship, to where does the architect turn?

Today, there is nothing at the right level.

One can research protocols, vendors, technical details. Once I’ve done my research, I usually have to infer the architectural patterns from these sources.

And, have you tried getting a vendor to convey a product’s architecture? Reviewing a vendor’s architecture is often frought with difficulties: salespeople usually don’t know, and sales engineers just want to make the product work, typically as a cookie cutter recipe. How many times have I heard, “but no other customer requires this!”

There just aren’t many documented architectural patterns available. And, of the little that is available, most has not been vetted for quality and proof.

Alan Paller is calling on us to set down our solutions. SANS’ new Smart Guide series was created for this purpose. These are intended to be short, concise security architecture solutions that can be understood quickly. These won’t be white papers nor the results of research. The solutions must demonstrate a proven track record.

There are two tasks that must be accomplished for the Smart Guide series to be successful.

- Authors need to write down the architectures of their successes

- The series will need reviewers to vet guides so that we can rely on the solutions

I think that the Smart Guides are one of the key steps that will help us all mature security architecture practice. From the current state of Art (often based upon personality) we will force ourselves to:

- Be succinct

- Be understandable to a broad range of readers and experience

- Be methodical

- Set down all the steps required to implement – especially considering local assumptions that may not be true for others

- Eliminate excess

And, by vetting these guides for worthwhile content, we should begin to deliver more consistency in the practice. I also think that we may discover that there’s a good deal of consensus in what we do every day**. Smart Guides have to juried by a body of peers in order for us to trust the content.

It’s a Call to Action. Let’s build a respository of best practice. Are you willing to answer the call?

cheers

/brook

** Simply getting together with a fair sampling of people who do what I do makes me believe that there is a fair amount of consensus. But that’s entirely my anecdotal experience in the absence of any better data