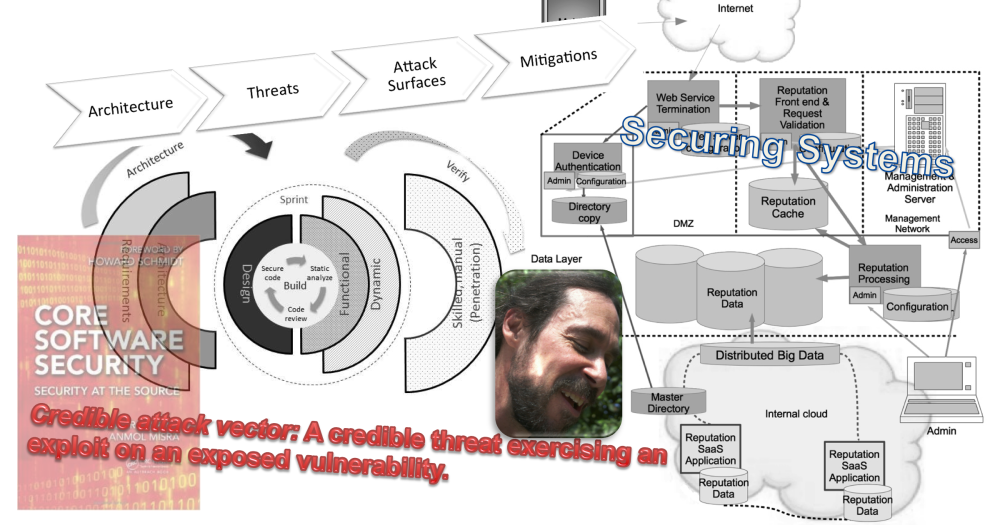

My friend and colleague, Dr. James Ransome, invited me late last Winter to write a chapter for his 10th book on computer security, Core Software Security, (with co-author, Anmol Misra) published by CRC Press. My chapter is “The SDL In The Real World”, SDL = “Secure Development Lifecycle”. The book was released December 9, 2013. You can get copies from the usual sources (no adverts here, as always).

It was an exciting process. James and I spent hours white boarding possible SDL approaches, which was very fun, indeed*. We collectively challenged ourselves to uncover current SDL assumptions, poke at the validity of these, and find better approaches, if possible.

Many of you already know that I’ve been working towards a different approach to the very difficult, multi-dimensional and multi-variate problem of designing and implementing secure software for a rather long time. Some of my earlier work has been presented to the industry on a regular basis.

Specifically, during the period of 2007-9, I talked about a new (then) approach to security verification that would be easy for developers to integrate into their workflow and which wouldn’t require a deep understanding of security vulnerabilities nor of security testing. At the time, this approach was a radical departure.

The proving ground for these ideas was my program at Cisco, Baseline Application Vulnerability Assessment, or BAVA, for short (“my” here does not exclude the many people who contributed greatly to BAVA’s structure and success. But it was more or less my idea and I was the technical leader for the program).

But, is ease and simplicity all that’s necessary? By now, many vendors have jumped on the bandwagon; BAVA’s tenets are hardly even newsworthy at this point**. Still, the dream has not been realized, as far as I can see. Vulnerability scanning still suffers from a slew of impediments from a developer’s view:

- Results count vulnerabilities not software errors

- Results are noisy, often many variations of a single error are reported uniquely

- Tools are hard to set up

- Tools require considerable tool knowledge and experience, too much for developers’ highly over-subscribed days

- Qualification of results requires more in-depth security knowledge than even senior developers generally have (much less an average developer)

And that’s just the tool side of the problem. What about architecture and design? What about building security in during iterative, fast paced, and fast changing agile development practices? How about continuous integration?

As I was writing my chapter, something crystalized. I named it, “developer-centric security”, which then managed to get wrapped into the press release and marketing materials of the book. Think about this: how does the security picture change if we re-shape what we do by taking the developer’s perspective rather than a security person’s?

Developer-centric software security then reduced to single, pointed question:

What am I doing to enable developers to innovate securely while they are designing and writing software?

Software development remains a creative and innovative activity. But so often, we on the security side try to put the brakes on innovation in favour of security. Policies, standards, etc., all try to set out the rules by which software should be produced. From an innovator’s view at least some of the time, developers are iterating through solutions to a new problem while searching for the best way to solve it. How might security folk enable that process? That’s the question I started to ask myself.

Enabling creativity, thinking like a developer, while integrating into her or his workflow is the essence of developer-centric security. Trust and verify. (I think we have to get rid of that old “but”)

Like all published works, the book represents a point-in-time. My thinking has accelerated since the chapter was completed. Write me if you’re intrigued, if you’d like more about developer-centric security.

Have a great day wherever you happen to be on this spinning orb we call home, Earth.

cheers,

/brook

*Several of the intermediate diagrams boggle in complexity and their busy quality. Like much software development, we had to work iteratively. Intermediate ideas grew and shifted as we worked. a creative process?

**At the time, after hearing BAVA’s requirements, one vendor told me, “I’ll call you back next year.”. Six months later on a vendor webcast, that same vendor was extolling the very tenets that I’d given them earlier. Sea change?