On this page are some of Brook’s original contributions to security practice.

I don’t mean to take full credit for the successes listed here. Many people contributed to these concepts; without them, none of these ideas would have seen the light of day or, more importantly, become running programs. (None of the foregoing is meant to imply that someone else may not have had the same idea at another time and place. If so, I’m simply not aware of it.)

Brook originated the following¹:

• Developer-run, decentralized web application vulnerability scanning.

In late 2004, Greg Giles and I spent 3 months trying to figure out a way to get 2500 web applications scanned for vulnerabilities. Out of those discussions, I hit upon the idea of creating a scan that produced very high confidence results (even if only for a limited set of vulnerabilities). The scan had to fit easily within a typical application development workflow. The interface had to be dead simple and require less than 15 minutes of training. The results had to point clearly to the cause of the errors. The technology had to be easily managed for a population of 5000 web developers. Arguments about false positives needed to be forestalled by the high confidence in findings.

Five major application scanning vendors and one (new) static analysis vendor were given the high-level requirements during the investigation period. Only one of these had been thinking along similar lines; that vendor won the contract. One vendor said, “that’s not a useful direction,” and another told me, “we’ll get back to you next year.” The original program was called Baseline Application Vulnerability Assessment (BAVA) and continues to run successfully today. Vinay Bansal and Ferris Jabri had a huge hand in making the program successful.

Interestingly, 8 months after we’d started the BAVA program, I attended a webcast at which the CTOs from 3 of the web scanner companies we’d approached were extolling developer-run scanning. Hmmm?

• Developer-centric security; “trust AND verify”

“Developer-centric security” started with the BAVA program in 2005/6, although I did not think of the term until 2013. The concept has matured through many experiments and revisions to its current form, best expressed in my chapter, “The SDL In The Real World”, in Core Software Security by Dr. James Ransome and Anmol Misra, 2014. The concept is always being refined, but the following question best sums up its principles:

“How am I enabling developers to be creative and innovative, securely?”

The basic idea behind developer-centric security is that trying something new engenders mistakes and errors. Bugs and/or design flaws are inevitable. Security ought to help find the errors and help refine designs, rather than wagging a nagging finger about mistakes having been made.

For instance, imagine if security code analyzers ran “like a compiler”. Imagine that the results could be trusted like those from an industrial-grade compiler; that is, the results had very high confidence. And imagine if security analysis were trivially easy to integrate into the developer’s workflow. That would be a start, and a fairly radical departure from the tools of today (though, as of this writing, some vendors are finally reaching towards this ideal).

• Agile secure development methodology focusing on deep engagement. This was co-created with Dr. James Ransome, Harold Toomey, and Noopur Davis.

• Teams of empowered, decentralized “partner” security architects spread across a development organization and integrated into delivery teams. Certainly, a few organizations have had “security champion” programs. These are not the same thing, at all. The original idea here was to train, empower, mentor, coach, and create a community of security architects by capitalizing on the interest and skills of an existing system/software architecture practice.

I originally pitched the concept to Michele Koblas in 2002, or perhaps it was early 2003. Ferris Jabri program-managed the first successful team with me and Cisco Infosec’s “Web Arch” team. Kudos go to Michele (originally), Nasrin Rezai, and John Stewart for strongly supporting the original experiment. Enterprise Architecture kept telling us that we had to wait. Ferris literally said, “We’re just going to do it, Brook.” We then proved the concept admirably. Thanks for your fine leadership, Ferris.

A key differentiator is empowering each virtual team member as a formal part of the security organization. That is, each member has the policy powers for security and must perform the due diligence role typically reserved to Infosec. These are not simply “red flag” spotters, but fully functional security architects. Such a program has to accompany the empowerment with training, mentoring, continued coaching, and support. In fact, without ongoing support and air cover for hard prioritization and risk decisions, the virtual team will ultimately fail. Another key difference is the creation of a community of support amongst the partner team. Participation in that community is a critical factor, as well as an inducement to perform the work (beyond having “security” on one’s resume).

Some of the key programmatic points are explained in Chapter 13 of Securing Systems.

• JGERR (Just Good Enough Risk Rating). The working model was created with Vinay Bansal. (An ISA Smart Guide was completed and edited in 2011, but the SANS Institute hasn’t published it.)

JGERR is descended from “Rapid Risk” by Rakesh Bharania and Catherine Blackader Nelson; theirs was the original idea to calculate risk scores with a spreadsheet of security questions. By now, though, JGERR is only loosely coupled to Rapid Risk in how it collects values and how risks are actually calculated.

JGERR is heavily indebted to Jack Jones’ FAIR methodology.

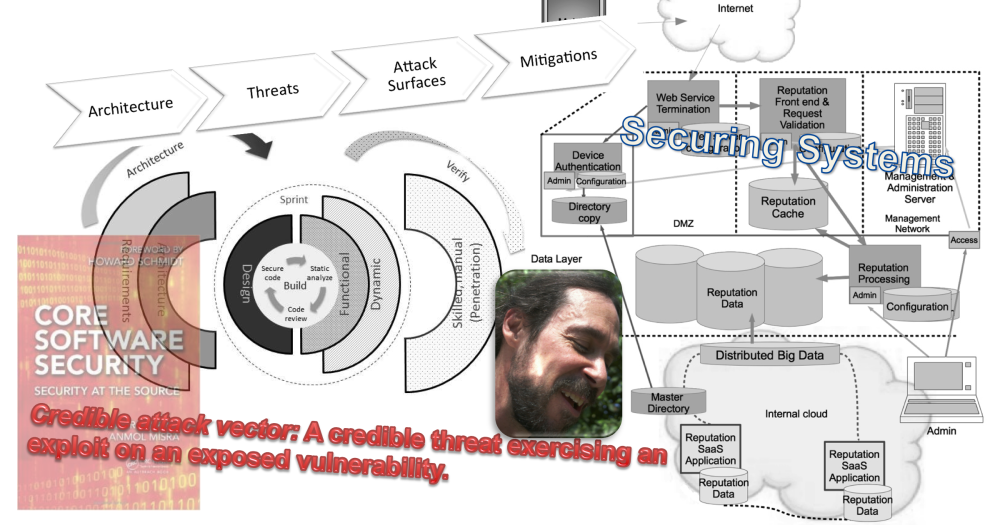

JGERR is based upon the concept of “credible attack vector” (CAV), which treats each of the terms that has to come together for a successful exploit as essentially boolean. CAV is then substituted for the probability term in a risk calculation. Rating of impacts takes the place of annualized loss.

Key concepts of JGERR are explained in Chapter 4 of Securing Systems.
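The CAV idea described above can be sketched in a few lines of Python. This is only a minimal illustration, not the JGERR model itself: the three term names, the 1 to 5 impact scale, and the function names are all assumptions made for the example. What it shows is the shape of the calculation, in which every term must be true for the attack vector to count as credible, and the resulting 0 or 1 stands in for the probability term that multiplies the impact rating.

```python
from dataclasses import astuple, dataclass


@dataclass(frozen=True)
class AttackVectorTerms:
    """Terms that must all hold for a successful exploit.

    The field names are hypothetical placeholders; the source does not
    list the actual JGERR terms.
    """
    threat_agent_present: bool
    vulnerability_exposed: bool
    exploit_available: bool


def credible_attack_vector(terms: AttackVectorTerms) -> int:
    """Return 1 if every term is true, otherwise 0 (a boolean conjunction)."""
    return int(all(astuple(terms)))


def risk_rating(terms: AttackVectorTerms, impact: int) -> int:
    """Use CAV in place of probability and an impact rating in place of loss.

    The 1..5 impact scale is an assumption made for this sketch.
    """
    if not 1 <= impact <= 5:
        raise ValueError("impact must be between 1 and 5")
    return credible_attack_vector(terms) * impact


if __name__ == "__main__":
    exposed = AttackVectorTerms(True, True, True)
    unexposed = AttackVectorTerms(True, False, True)
    print(risk_rating(exposed, impact=4))
    print(risk_rating(unexposed, impact=5))
```

Treating the terms as a strict conjunction means that a single missing term removes the risk from consideration, however severe the impact would have been.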

• Software security architecture principles

- Be as free as humanly possible from errors that can be manipulated intentionally, ergo, be free of vulnerabilities that can harm customers or the brand

- Have those security features that customers require for their intended use cases

- Be self-protective; resist the types of attacks that will be promulgated against the software

- “Fail well” in the event of a failure. That is, fail in such a manner as to minimize the consequences of a successful attack

- Install with sensible, “closed” defaults

• Security architecture review process “Architecture, Threats, Attack Surfaces, Mitigations” (ATASM)

This concept has become both a book and a participatory threat modeling pedagogy: Securing Systems

• SaaS, multi-tenant, shared application, double-indirection data enveloping. This concept is based upon work from WebEx Engineering. My original idea is to double the indirection with the addition of cryptographic techniques for non-repudiation and non-predictability.

• An architecture pattern that securely allows untrusted traffic to be processed by internal applications which were not designed to process untrusted traffic – Vinay Bansal architected the first working example. ISA Smart Guide published in 2011 by the SANS Institute (apparently now out of print).

• An early set of technical criteria for measuring 3rd party vendors’ security posture. Originally published by Cisco in 2005. Subsequently, Vinay Bansal and I prepared an ISA Smart Guide for the SANS Institute. Apparently, that guide is no longer available.

Others’ original ideas to which Brook has contributed:

• Security Knowledge Empowerment training (2011 National Cyber Security Award to Michele Guel)

• Secure Agile Development with Noopur Davis, Harold Toomey, and Dr. James Ransome. Watch for a white paper in the near future.

¹ “Original” as far as I know.