Reading a recent article, “Five Common Cloud Security Threats and Data Breaches”, posted by Andreas Dann, I came across some advice for APIs that is worth exploring.

The table stakes (basic) security measures that Mr. Dann suggests are a must. I absolutely support each of the recommendations given in the article. In addition to Mr. Dann’s recommendations, I want to explore the security needs of highly exposed inputs like internet-available APIs.

Again and again, I see recommendations like Mr. Dann’s: “…all APIs must be protected by adequate means of authentication and authorization”. Furthermore, I have been to countless threat modelling sessions where the developers place great reliance on authorization, often sole reliance (unfortunately, as we shall see).

For APIs with large open source or public access, or which involve relatively diverse customer bases, authentication and authorization alone will be insufficient.

As True Positives’ AppSec Assurance Strategist, I’d be remiss to misunderstand just what protection, if any, controls like authentication and authorization provide in each particular context.

For APIs, essential context can be found in something else Mr. Dann states: “APIs…act as the front door of the system, they are attacked and scanned continuously.” One of the only certainties we have in digital security is that any IP address routable from the internet will be poked and prodded by attackers. Period.

That’s because, as Mr. Dann notes, the entire internet address space is continuously scanned for weaknesses by adversaries. Many adversaries, constantly. This has been true for a very long time.

So, we authenticate to ensure that entities who have no business accessing our systems don’t. Then, we authorize to ensure that entities can only perform those actions that they should and cannot perform actions which they mustn’t. That should do it, right?

Only if you can convince yourself that your authorized user base doesn’t include adversaries. There lies “the rub”, as it were. Only systems that can verify the trust level of authorized entities have that luxury. There actually aren’t very many cases involving relatively assured user trust.

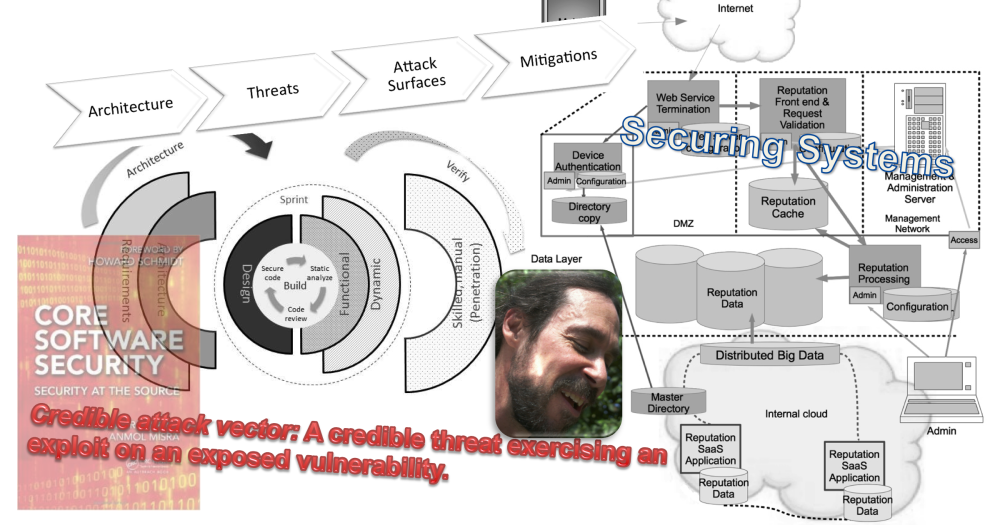

As I explain in Securing Systems, Secrets Of A Cyber Security Architect, and Building In Security At Agile Speed, many common user bases will include attackers. That means that we must go further than authentication and authorization, as Mr. Dann implies with, “…the API of each system must follow established security guidelines”. Mr. Dann does not expand on his statement, so I will attempt to provide some detail.

How do attackers gain access?

For social media and freemium APIs (and services, for that matter), accounts are given away to any valid email address. One has only to glance at the number of cats, dogs, and birds who have Facebook accounts to see how trivial it is to get an account. I’ve never met a dog who actually uses, or is even interested in, Facebook. Maybe there’s a (brilliant?) parrot somewhere? (I somehow doubt it.)

Retail browsing is essentially the same. A shop needs customers; all parties are invited. Even if a credit card is required for opening an account, which used to be the norm, but isn’t so much anymore, stolen credit cards are cheaply and readily available for account creation.

Businesses which require payment still don’t get a pass. The following scenario is from a real-world system for which I was responsible.

Customers paid for cloud processing. There were millions of customers, among which were numerous nation-state governments whose processing often included sensitive data. It would be wrong to assume that nation-states with spy agencies could not afford to purchase a processing account in order to poke and prod just in case a bug should allow that state to get at the sensitive data items of one of its adversaries. I believe (strongly) that it is a mistake to assume that well-funded adversaries won’t simply purchase a license or subscription if the resulting payoff is sufficient. Spy agencies often work very slowly and tenaciously, often waiting years for an opportunity.

Hence, we must assume that our adversaries are authenticated and authorized. That should be enough, right? Authorization should prevent misuse. But does it?

Rule #1: all software fails. That includes our hard-won and carefully built authorization schemes.
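To make that failure mode concrete, here is a minimal sketch of one way an authorization check can go wrong. Everything in it is hypothetical and invented for illustration (the `User` type, the `RECORDS` store, the `reader` role, and both functions); it is not taken from the system described above. The flawed version verifies the caller's role but never checks who owns the record, so a legitimately paying, authenticated, and authorized customer can read another tenant's data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    """Hypothetical authenticated principal (assumption for this sketch)."""
    name: str
    tenant: str
    roles: frozenset


# Hypothetical in-memory store: record id -> (owning tenant, payload).
RECORDS = {
    1: ("tenant-a", "tenant A's sensitive data"),
    2: ("tenant-b", "tenant B's sensitive data"),
}


def read_record_flawed(user: User, record_id: int) -> str:
    """Checks the role, but forgets to check who owns the record."""
    if "reader" not in user.roles:
        raise PermissionError("role 'reader' required")
    return RECORDS[record_id][1]


def read_record(user: User, record_id: int) -> str:
    """Checks the role and the record's ownership."""
    if "reader" not in user.roles:
        raise PermissionError("role 'reader' required")
    owner, payload = RECORDS[record_id]
    if owner != user.tenant:
        raise PermissionError("record belongs to another tenant")
    return payload


if __name__ == "__main__":
    # A paying customer of tenant B who happens to be an adversary.
    mallory = User("mallory", "tenant-b", frozenset({"reader"}))
    print(read_record_flawed(mallory, 1))  # leaks tenant A's record
    try:
        read_record(mallory, 1)
    except PermissionError as err:
        print("denied:", err)
```

Both functions would pass a test that only exercises a user reading their own records, which is part of why this class of bug tends to survive to release.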

“[E]stablished security guidelines” should expand to a robust and rigorous Security Development Lifecycle (SDL or S-SDLC), as James Ransome and I lay out in Building In Security At Agile Speed. There’s a lot of work involved in “established security”, or perhaps better termed, “consensus, industry standard software security”. Among software security experts, there is a tremendous amount of agreement on what “robust and rigorous” software security entails.

(Please see NIST’s Secure Software Development Framework (SSDF) which is quite close to and validates the industry standard SDL in our latest book. I’ll note that our SDL includes operational security. I would guess that Mr. Dann was including typical administrative and operational controls.)

Most importantly, for exposed interfaces, I continue to recommend regular, or better, continual fuzz testing.

The art of software is constraining software behaviour to the desired and expected. Any unexpected behaviour is probably unintended and thus, will be considered a bug. Fuzzing is an excellent way to find unintended programme behaviour. Fuzzing tools, both open source and commercial, have gained maturity while at the same time, commercial tools’ price has been decreasing. The barriers to fuzzing are finally disappearing.
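As a rough illustration of the idea, the sketch below throws short random strings at an input handler and records any failure that is not the handler's documented rejection. It is a toy under stated assumptions: `parse_quantity` is a hypothetical handler with a deliberately planted bug, and `fuzz` is a naive random generator written for this post, not the interface of any real fuzzing tool. Mature fuzzers, coverage-guided ones in particular, explore inputs far more effectively.

```python
import random
import string


def parse_quantity(raw: str) -> int:
    """Hypothetical API input handler (not from any real system).

    Contract: reject bad input with ValueError; otherwise return how many
    packs of `quantity` items fit into a 1000-item batch.
    """
    if not raw.isdigit():
        raise ValueError("quantity must be digits only")
    quantity = int(raw)
    if quantity > 999:
        raise ValueError("quantity too large")
    # Planted bug: nothing rejects 0, so this line can divide by zero.
    return 1000 // quantity


def fuzz(target, runs: int = 20000, seed: int = 0):
    """Throw short random strings at `target`; collect undocumented failures."""
    rng = random.Random(seed)  # fixed seed so a finding can be replayed
    alphabet = string.digits + string.ascii_letters + string.punctuation + " "
    failures = []
    for _ in range(runs):
        raw = "".join(rng.choice(alphabet) for _ in range(rng.randint(0, 4)))
        try:
            target(raw)
        except ValueError:
            pass  # documented rejection: expected behaviour
        except Exception as exc:  # anything else is unintended behaviour
            failures.append((raw, type(exc).__name__))
    return failures


if __name__ == "__main__":
    for raw, error in fuzz(parse_quantity)[:5]:
        print(f"input {raw!r} raised {error}")
```

The important design choice is the split between the two `except` clauses: the handler's contract defines what counts as expected, and everything outside that contract is reported as a candidate bug.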

Rest assured that you probably have authorized adversaries amongst your users. That doesn’t imply that we abandon authentication and authorization. These controls continue to provide useful functions, if only to tie bad behaviour to entities.

Along with authentication and authorization, we must monitor for anomalous and abusive behaviour, because our protective software will inevitably fail. We must also do all we can to remove issues before release, while using techniques like fuzzing to identify those unknown unknowns that will leak through.
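One simple form such monitoring can take is counting rejected requests per authenticated account over a sliding time window, since an account that keeps hitting denials or malformed-input errors may be probing. The sketch below is only an assumption about how that could look: the `RejectionMonitor` class, its default threshold of 5, and its 60-second window are all hypothetical values chosen for illustration, and real thresholds would need tuning against actual traffic.

```python
from collections import defaultdict, deque


class RejectionMonitor:
    """Flags accounts that trigger many rejected requests in a short window."""

    def __init__(self, threshold: int = 5, window_seconds: float = 60.0):
        self.threshold = threshold
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # account -> rejection timestamps

    def record_rejection(self, account: str, timestamp: float) -> bool:
        """Record one rejected request; return True if the account looks abusive."""
        events = self._events[account]
        events.append(timestamp)
        # Drop rejections that have aged out of the sliding window.
        while timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) >= self.threshold


if __name__ == "__main__":
    monitor = RejectionMonitor(threshold=3, window_seconds=10.0)
    for second in (0.0, 1.0, 2.0):
        flagged = monitor.record_rejection("mallory", second)
        print(f"t={second}: flagged={flagged}")
```

Because the count is keyed on the authenticated account, this is also where authentication earns its keep: it ties the bad behaviour to an entity that can be investigated or suspended.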

Cheers,

/brook