Two days, about 20 speakers, all the major tool makers, pundits, researchers, and penetration testers: this summit was almost a who’s who of application security. SANS wanted to kick off their new GIAC application coding certification and, along with that, take the pulse of the industry. I was privileged to be included on a couple of panels. There were frighteningly smart people speaking; country mouse playing with the city cats, definitely!

A couple of my colleagues sat for the exam. I once held the GIAC Intrusion Detection certification (#104?), but I let it lapse; it’s not really relevant to what I do now. My buddies said it was a reasonable exam. It covered implementing security controls through the language APIs (for Java, JAAS calls) and coding securely.

The larger question is: what can we make of this certification? How much effect will it have? Will getting coders certified change the landscape significantly?

There was plenty of FUD from the toolmakers. Yes, the entire web is vulnerable, apparently. Ugh! You probably knew that already, huh?

The number of applications out there is staggering; estimates ran to around 100 million. That’s a lot of vulnerable code. And, considering that my employer’s DMZ takes a pounding of some 6 million attacks every 24 hours, it’s not too far a stretch to assume that at least a few of those attacks are getting through. We only know about the incidents that are reported or that cause damage (as the Cenzic report released at the Summit pointed out).

And it seems that the financial incentives for exploiting vulnerabilities are maturing? (A Google search will turn up dozens of reports of financially motivated hacks.) If so, cash incentives will likely increase the number of incidents for all of us. Another big sigh.

To me, one of the most interesting speakers at the conference was Dinis Cruz, CTO of Ounce Labs. A couple of times, he pointed out that while, yes, we are being hacked, there’s no blood on the floor. Nobody’s been killed by a web hack (thank goodness!). We (the industries that use the web heavily) are losing money on damage control, on making up losses (especially in the financial industry), and on remediation. Absolutely true.

But has anyone done an analysis of what the risk picture looks like? Is web exposure worth it? Are we making more than we’re losing? So much more that, as an actuary would see it, the risks are worth taking?

I know this may seem a crazy question for someone inside the information security industry to ask. But anyone who knows me knows that I like to ask the questions that aren’t being asked, the ones that might even be taboo. We security folk often focus on reducing risk until we feel comfortable: that “warm and fuzzy.” Risk is “bad” and must be reduced. Well, yeah. I fall into that trap all too often myself.

When assessing a system, it’s important to bring its risk down to the levels that are “usual and acceptable” by the practice and custom of one’s organization. That’s at least part of my daily job.

But appropriate business risk always includes room for losses. What’s the ratio? Dinis definitely set me thinking about the larger picture.

My employer takes in more than 90% of its revenue through websites. As long as losses stay within tolerable limits, we should be OK, right? The problem with this reasoning lies in a few special classes of data compromise. These can’t be ignored quite so easily (or, at the least, the risk picture needs to be calculated more holistically):

• Privacy laws are getting tougher. How does one actually notify the attorneys general of 38 states first? And Japan’s law makes this problem seem trivial.

• Privacy breaches are really hard on customer goodwill. Ahem.

• Internet-savvy folks are afraid of identity theft (again, a personally identifiable information (PII) issue). The aftermath of identity theft can last for years and go way beyond the $50 credit card loss limit that makes consumer web commerce run.

I’ve got friends working for other companies that are dealing with the fallout from these sorts of breaches. It ain’t pretty. It’s bad for organizations. The fallout sometimes dwarfs the asset losses.

And when I think about all the vulnerabilities out there, I have to wonder: are we each just one SQL injection exposure away from the same fate? Forget the laptop left on the car seat. PII is sitting on organizational database servers that are being queried by vulnerable web applications.
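To make that SQL injection worry concrete, here is a minimal sketch using Python’s standard-library `sqlite3` module against an in-memory database. The `customers` table, its made-up rows, and the two function names are hypothetical, invented for illustration; the only point is the contrast between pasting user input into the SQL text and passing it as a bound parameter.

```python
import sqlite3


def make_db():
    # Hypothetical table of customer PII with made-up rows, in memory only.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (name TEXT, ssn TEXT)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?)",
        [("alice", "000-00-0001"), ("bob", "000-00-0002")],
    )
    return conn


def find_customer_unsafe(conn, name):
    # VULNERABLE: user input is concatenated directly into the SQL text,
    # so a crafted value can change the meaning of the query.
    query = "SELECT name, ssn FROM customers WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_customer_safe(conn, name):
    # Parameterized query: the driver binds `name` as data, never as SQL.
    return conn.execute(
        "SELECT name, ssn FROM customers WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_db()
    attack = "x' OR '1'='1"
    print("unsafe rows returned:", len(find_customer_unsafe(conn, attack)))
    print("safe rows returned:", len(find_customer_safe(conn, attack)))
```

With the injected value, the concatenated query becomes `... WHERE name = 'x' OR '1'='1'` and hands back every row, while the parameterized version simply looks for a customer with that odd literal name and finds none.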

Considering this possibility brings me back to the importance of application security. Yes, I think we have to keep working on it.

And our tool set is immature. As I see it, the industry is highly dependent on people like Dinis Cruz to review our code and analyze our running applications. I don’t think Dinis is a cheap date!

So, what to do?

I’ll offer what John Chambers, CEO of Cisco Systems, Inc., told me. (No, I don’t know him. I happened to be in the same room, and he happened to take my question and answer it. Serendipitous circumstances.) “Think architecturally.”

That is, I don’t think we’re going to train and hire our way out of the vulnerability debt we’ve run up for ourselves, with 100 million vulnerable apps on the web. Ahem. That’d take a lot of analysis. And, at the usual cost of $300/hour, who’d have the resources?
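A back-of-the-envelope check on that question, assuming (purely for illustration) that each of the roughly 100 million applications needed just one hour of expert review at $300/hour:

$$
10^{8}\ \text{apps} \times 1\ \frac{\text{hour}}{\text{app}} \times \frac{\$300}{\text{hour}} = \$3 \times 10^{10} = \$30\ \text{billion}
$$

A real review takes far more than an hour per application, so treat that figure as a floor rather than an estimate.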

I’ll suggest that we have to stop talking to ourselves about the problem and get it in front of all the stakeholders. Who are they?

• The risk holders (i.e., executive management)

• Our developer communities. (SANS certification is certainly a start. But I think security has to be taught as a routine part of proper defensive programming. Just as code must be structured and exceptions handled, input must be validated.)

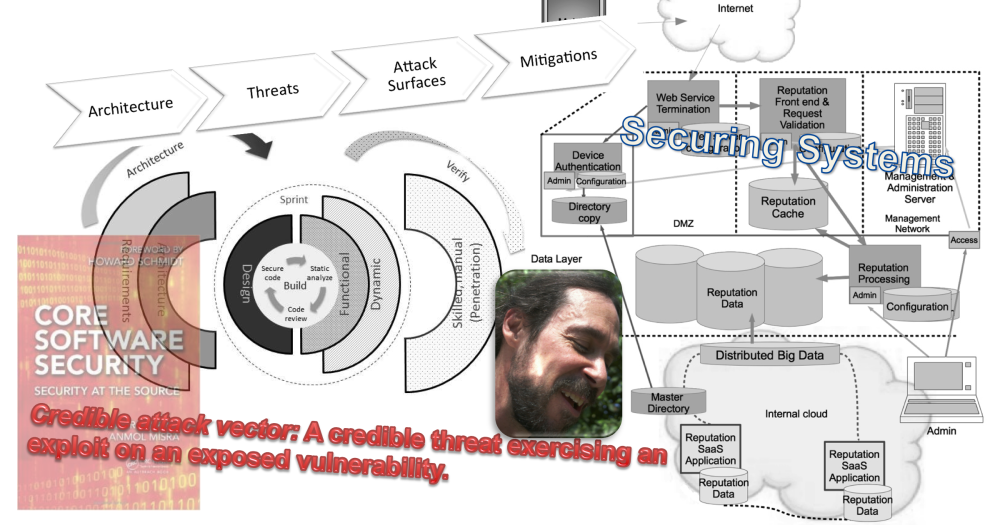

• Security as an aspect of system design and architecture. Not that cute box off to the side labeled “security” in the logical architecture diagram. Rather, we need to treat each security control as a component of the system, just like the other logical functions.
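As a small illustration of treating “input must be validated” as ordinary defensive programming, here is a minimal Python sketch of allowlist validation. The account-ID rule (6 to 12 ASCII letters or digits) and the `validate_account_id` name are hypothetical; the design choice worth noting is that it accepts only what is known to be good instead of trying to enumerate everything bad.

```python
import re

# Hypothetical rule for illustration: an account ID is 6 to 12 ASCII letters or digits.
ACCOUNT_ID = re.compile(r"[A-Za-z0-9]{6,12}")


def validate_account_id(raw):
    """Return raw unchanged if it matches the allowlist; otherwise raise ValueError."""
    # fullmatch requires the entire string to match, so trailing junk such as
    # "abc123' OR '1'='1" or a stray newline is rejected, not partially accepted.
    if not isinstance(raw, str) or ACCOUNT_ID.fullmatch(raw) is None:
        raise ValueError("invalid account id: %r" % (raw,))
    return raw


if __name__ == "__main__":
    for candidate in ["abc123", "abc123' OR '1'='1", "x"]:
        try:
            print("accepted:", validate_account_id(candidate))
        except ValueError as err:
            print("rejected:", err)
```

Validation like this is a complement to, not a substitute for, safe query construction further down the stack.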

Which brings me back to the SANS Summit.

My sense of this summit is that we haven’t yet reached the tipping point. I didn’t speak to everyone there, but I spoke to a fair share. (I won’t call it a sample; my conversations were hardly statistical in nature!)

Most of the folks I chatted with were, like me, in the trenches. We had a few formidable notables in the room and, I think, a few beginners as well. My guess is that most branches of the industry were represented: tool makers, consultants, and information security folk, with a sprinkling of government folk thrown in. The folks I spoke to understood the issues.

If I may hazard a guess, the folks who made the time to come are those concerned with, charged with, or making money from application security. In other words, the industry. We were talking to ourselves.

That’s not necessarily a bad thing. I’m guessing that most of us have been pretty lonely out there? There’s validation and solidarity (leftists, please forgive me for using that term here, but it does fit!) when we get together. We network. We test our ideas and our programs. That’s important. And I heard a few really great ideas that were fresh to me.

But I think we need to take this out a good deal further, to a “tipping point” if you will, in order to move the state of the art. And I don’t think we’re there yet. Consider how many programmers are writing web code right now.

What do you think?

cheers

/brook