“We sell Hammers” – Not Security!

“Several former Home Depot employees said they were not surprised the company had been hacked. They said that over the years, when they sought new software and training, managers came back with the same response: “We sell hammers.””

Failure to understand the dependence that we have not just on our obvious digital devices – smart phone, laptop, tablet, fancy fitness bling on your wrist – but also on a matrix of interconnection tying all these devices and billions more together – will land you in the hot seat. For about three billion out of the seven billion people on this planet, we have long since passed the point where we are isolated entities who act alone and in some measure of unconnected global anonymity. For most of us, our lives are not just dependent upon technology itself, but also on the capabilities of innumerable, faceless business entities acting on our behalf.

Consider the following, common, but trivial example.

When I swipe my credit card at the pump to purchase petrol, that transaction passes through any number of computation devices and applications operated by a chain of business entities. The following is a typical scenario (an example flow – but not the only one, of course):

- The point of sale device1, itself (likely supplied by a point of sale provider)

- The networking equipment at the station2

- The station’s Internet provider’s equipment (networking, security, applications – you have no idea!)

- One or more telecom company’s networking infrastructure across the Internet backbone

- The point of sale company or their proxy

- More networking equipment and Internet providers

- A credit card payment processor

- More networking equipment and Internet providers

- The card issuer who must validate the card and agree to pay the transaction for me

And so on…. All just to fill my tank up. It’s seamless and invisible – the communications between entities usually bring up an encrypted tunnel, though the protection offered is not as solid as you may hope – Invisible and seamless, except when the processing is not so invisible, like during a compromise and breach.

Every one of these invisible players has to have good enough security to protect me, and you, if you also use some sort of payment card for your petrol.

Home Depot, and Target before them, (and who knows who’s next?) failed to understand that in order to sell a hammer in the Internet world, you’re participating in this huge web of digital interconnection. Even more so, if you’re large enough, your business network will have become an eco-system of digital entities, many of whose security practices will affect your security posture in fairly profound ways. When 2 (or more) systems connect, each may affect the security posture of the other, sometimes in profound ways.

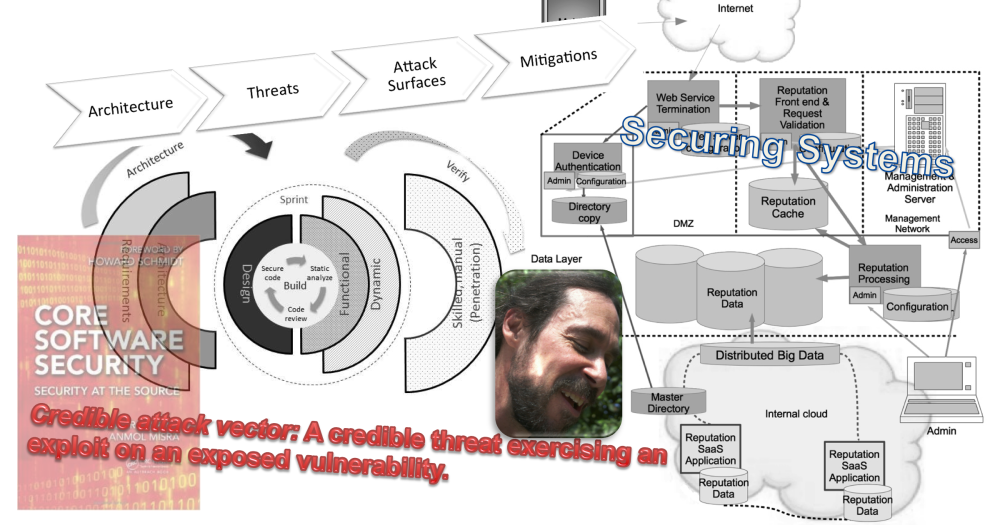

And there be pirates in them waters, Matey. As I wrote in the introduction to my next book, Securing Systems: Applied security architecture and threat models:

“…as of this writing, we are engaged in a cyber arms race of extraordinary size, composition, complexity, and velocity.”

One of the biggest problems for security practitioners remains that the cyber “arms race” isn’t just between a couple of nation-states. Foremost, the nation-state cyber war has to cross the same digital ocean that we use for our daily lives and digital entertainment. The shared web makes every digital citizen, potential “collateral damage”. But, there are more players than governments.

As can occur in a ground war, virtual “warlords” have private cyber armies marauding for loot, my loot, your loot. Those phishing spam don’t come from your friends, right3? Just trying to categorize the various entities engaged in cyber attacks could generate a couple of fine PhD theses and perhaps even provide years of follow-on papers? The number and varying loyalties of the many players who carry out cyber attacks increases the “size” of the problem, adds to the “composition”, and generates a great deal of “complexity”. It’s enough to make a well-meaning box-store retailer bury its collective head in the virtual sand. Which is precisely what happened to that hammer seller, Home Depot.

But answering the “who” doesn’t complete the picture. There’s the macro “how”, as well4. The Internet seems to suffer the “tragedy of the commons“.

In order to keep the Internet sufficiently interesting with compelling content such that we want to participate, it absolutely must remain neutral in character5. While Internet democracy certainly appears to be quite messy, the very thing that drives the diversity of content on the Internet is its level playing field6.

But leaving the Internet as an open field for all to enjoy means that some will take advantage of the many simply because the “pickings” are too rich to ignore. There is just too much to steal to let those resources lay untouched. And the pirates don’t! People actually do answer those “Nigerian Prince” scam emails. Really, someone does. People do buy those knock-off drugs. For the 3 billion of us who are digitally connected, it’s a dangerous digital day, every single day. Watch what you click!

In short, if you’re reading this on my blog site, you are perhaps an unwitting participant in that “cyber arms race of extraordinary size, composition, complexity, and velocity.” And so is every business that employs modern digital capabilities, whether for payments, or any other task. Failure to understand just how dependent a business is upon this matrix of digital interaction will make one a Target (pun intended). CEO’s, you may want to pay closer attention? Ignoring the current realities could cost you your job, perhaps even your career7!

If you think that you only sell objects and not some level of digital security, I fear that you are likely to be very sadly mistaken?

cheers,

/brook

- My friend and former colleague, Lucy McCoy, wrote the communications code in the first generation of gas pump payment terminals. At that time, terminals communicated via modem and phone line. She was a serial communications wiz. I remember the point of sale terminal laid out in her lab area. Lucy has since passed away. She was a brilliant engineer; she gave my code the best quality testing ever.

- The transactions have to get from station to payment processing, right? Who runs those cable modems and routers at the station? Could be the Internet provider, or maybe not. I run my own modem/routers/switches at home to which I have full admin access.

- I don’t know any spammers, as far as I know? Perhaps I make an unwarranted assumption that you don’t, either?

- The “what” and “why” of cyber attack seem pretty clear. Beyond attackers after money, they are after some other advantage: geo-political, business, just causes (pick your favourite or most hated cause), career enhancement, what-have-you. This is all pretty well documented. The security industry seems preoccupied with the “what”, i.e., the technical details of exploits. Again, these technical details seem pretty well documented.

- Imagine if your most hated or feared government had control over your Internet use, even the Internet itself, and proceeded to feed you exactly what they wanted you to know and prevented you from any other content. How would you like that?

- The richness and depth that is an emergent and continuing quality of the Internet, to me, demonstrates the absolute genius of the originators and early framers of the protocols and design.

- Of course, if I had a severance of $15.9 million, maybe I wouldn’t very much mind ending my career?